Access, prepare, blend, and analyze data with ease.

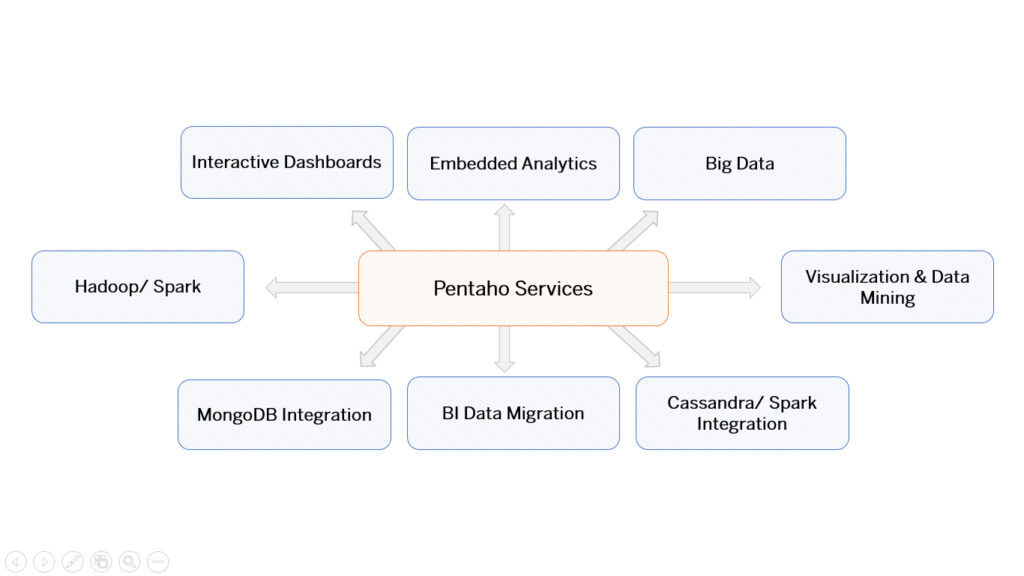

A complete set of services and suites for BI, Analytics, Big Data, and Data Integration.

Setting Up a Big Data Environment

- Implementation of Big Data Hadoop distributions: MapR, Cloudera, Hortonworks

- Implementation of Big Data-enabled databases: MongoDB, InfiniDB, AWS Redshift, HPCC, etc.

- Big Data Hadoop implementation with Pentaho

- Real-time analytics with Big Data

- Hadoop Consulting

- Engineering capabilities to drive the Big Data initiatives with global customers

- Packaged offerings to ensure successful Big Data deployments across the entire life cycle

Stress and Performance Testing

- Stress, load, and performance testing based on enterprise-specific requirements

- Improve response times to fall within agreed norms

- Sustained support for concurrent users

- Speed under massive load / sudden spikes

Embed Pentaho (OEM)

- Customization and rebranding of User Console

- Add new features or modify existing functionality

- Custom Plugins

- Google Map Integration

- Custom UI development

- Liferay integration

- Single Sign-on (SSO)

Big Data Analytics

- Process and transform your data into Hadoop without MapReduce

- Render data in our custom visualization and analytics solutions

- Load operational data into a Hadoop distribution such as Cloudera, MapR, Hortonworks, or Apache

- Load files to Hive, HBase, or HDFS

Business Analytics

- Translate business use cases into intuitive dashboards

- Create custom scorecards

- Collaboration features and intuitive filters

Data Integration

- Complex and large-scale data integration

- Data Warehouse (DWH) creation

- DWH Capacity Planning

- Data modelling by domain experts

- Redesign of the DWH as a columnar one

Migration Services

- Make organizations Big Data ready

- Bring down cost by up to 90%

- Migration from older Pentaho versions to the current version

- Migration from other ETL tools to PDI

System Health Check & Recommendations

- Quick check of general parameters such as CPU, disk, and memory usage, plus availability

- Audit-related data, plus database and report-layout bottlenecks

- PDI-related database connection types: native vs. ODBC

- Detailed application-server checks

- Review of the current deployment architecture

- Review of the security framework: authentication and authorization

Setting Up a High-Availability Multi-Clustered Environment

- Perform classic “divide and conquer” processing of data sets in parallel

- Java-based data integration engine with the MapR Hadoop cache / MapReduce tasks across all Hadoop cluster nodes

- Use the parallel processing and high availability of Hadoop

- Multi-tenant environment

- Architecture Workshop

- Implementation of multi-tenant SaaS offerings on the cloud

- Development of Pentaho extensions and plug-ins for multi-tenancy support

- Data modelling for multi-tenancy

Setting Up a Cloud Environment

- BI and DWH setup and maintenance on AWS and Rackspace

- Setting up a Content Delivery Network for AWS and Rackspace cloud offerings

- Production monitoring

Interested? Let's get in touch!

Book a free consultation with one of our experts to take your business to the next level!